Explaining the Issues with the Channel Method in LLM Prompt-Based Classification

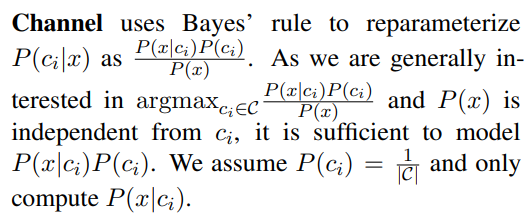

Recently, a few papers on LLM prompt-based classification (e.g., arXiv:2202.12837, “Rethinking the Role of Demonstrations: What Makes In-Context Learning Work?”) have used a method that their authors refer to as the “channel method”. The idea was introduced by Min et al.^[Noisy Channel Language Model Prompting for Few-Shot Text Classification], who explained and motivated it using Bayes’ rule, as shown in the figure above. We believe that this explanation is not mathematically valid.

What are the direct and channel methods of prompt-based classification?

In the direct method of prompt-based classification, the following probability is calculated: \(P_{LLM}(class|input)\), where the input, usually text, is also called a prompt. \(P_{LLM}(class|input)\) denotes the decoding probability that the LLM assigns to each class token (e.g., “positive” and “negative”) after the prompt is fed in as input. The predicted class is \(\underset{c_i}{\operatorname{argmax}} P_{LLM}(class = c_{i}|input)\).
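A minimal Python sketch of the direct method follows. It assumes a hypothetical toy bigram table `BIGRAM` in place of a real LLM and hypothetical class tokens `great` and `awful`; the helper names `suffix_log_prob` and `direct_predict` are also illustrative. The score of each class is the log-probability of decoding its class token after the input, accumulated with the chain rule.

```python
import math

# Hypothetical toy "LLM": a bigram table P(next token | previous token).
# It is an illustrative stand-in, not a real model; every row sums to 1.
BIGRAM = {
    "[bos]": {"great": 0.25, "awful": 0.25, "fun": 0.25, "dull": 0.25},
    "great": {"great": 0.1, "awful": 0.1, "fun": 0.6, "dull": 0.2},
    "awful": {"great": 0.1, "awful": 0.1, "fun": 0.2, "dull": 0.6},
    "fun": {"great": 0.7, "awful": 0.1, "fun": 0.1, "dull": 0.1},
    "dull": {"great": 0.1, "awful": 0.7, "fun": 0.1, "dull": 0.1},
}

# Hypothetical class tokens: the word the LLM should decode for each class.
CLASS_TOKENS = {"positive": ["great"], "negative": ["awful"]}


def suffix_log_prob(prefix, suffix):
    """log P_LLM(S = suffix | P = prefix), accumulated token by token."""
    context = list(prefix)
    total = 0.0
    for token in suffix:
        # The toy model only looks at the last context token.
        total += math.log(BIGRAM[context[-1]][token])
        context.append(token)
    return total


def direct_predict(input_tokens):
    """Direct method: argmax_c P_LLM(S = class token of c | P = input)."""
    return max(CLASS_TOKENS,
               key=lambda c: suffix_log_prob(input_tokens, CLASS_TOKENS[c]))


print(direct_predict(["fun"]))   # positive
print(direct_predict(["dull"]))  # negative
```

With a real LLM, `suffix_log_prob` would instead sum the model's token log-probabilities over the suffix positions after a forward pass on prefix plus suffix.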

In the channel method of prompt-based classification, we instead compute \(\underset{c_i}{\operatorname{argmax}} P_{LLM}(input|class = c_{i})\). Here, given the class information, the probability of generating the input text is compared across classes. Note: a verbalizer is often used to convert each class into a natural-language description (a single word or a short sentence), e.g., “great” or “It was great.” for “class=1” in movie review sentiment classification.
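The channel method can be sketched with the same kind of toy setup. Again, `BIGRAM` is a hypothetical bigram stand-in for a real LLM, and `VERBALIZER`, `suffix_log_prob`, and `channel_predict` are illustrative names; the verbalizer here maps each class to a single hypothetical word.

```python
import math

# Hypothetical toy "LLM": a bigram table P(next token | previous token).
# It is an illustrative stand-in, not a real model; every row sums to 1.
BIGRAM = {
    "[bos]": {"great": 0.25, "awful": 0.25, "fun": 0.25, "dull": 0.25},
    "great": {"great": 0.1, "awful": 0.1, "fun": 0.6, "dull": 0.2},
    "awful": {"great": 0.1, "awful": 0.1, "fun": 0.2, "dull": 0.6},
    "fun": {"great": 0.7, "awful": 0.1, "fun": 0.1, "dull": 0.1},
    "dull": {"great": 0.1, "awful": 0.7, "fun": 0.1, "dull": 0.1},
}

# Hypothetical verbalizer: a natural-language description for each class.
VERBALIZER = {"positive": ["great"], "negative": ["awful"]}


def suffix_log_prob(prefix, suffix):
    """log P_LLM(S = suffix | P = prefix), accumulated token by token."""
    context = list(prefix)
    total = 0.0
    for token in suffix:
        # The toy model only looks at the last context token.
        total += math.log(BIGRAM[context[-1]][token])
        context.append(token)
    return total


def channel_predict(input_tokens):
    """Channel method: argmax_c P_LLM(S = input | P = verbalizer(c))."""
    return max(VERBALIZER,
               key=lambda c: suffix_log_prob(VERBALIZER[c], input_tokens))


print(channel_predict(["fun"]))   # positive
print(channel_predict(["dull"]))  # negative
```

Compared with a direct-method scorer built on the same helper, the only change is the order of the two arguments passed to `suffix_log_prob`: the verbalized class becomes the prefix and the input becomes the suffix.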

The flaw in the Bayes’-rule-based explanation above is that these two probability expressions are not connected by Bayes’ rule. Let’s see why.

First, let’s define all the random variables and distributions involved.

P (Prefix): Textual input used for conditional generation with the LLM. The prefix is fed to the LLM first.

S (Suffix): Textual target for which we want the decoding probability assigned by the LLM, conditioned on some prefix.

\(P_{LLM}(S|P)\): Autoregressive (or Markovian) probability distribution modeled by the LLM’s architecture and weights. We use this expression to denote the conditional decoding probability of the suffix S given the input prefix (prompt) P.

\(P_{LLM}(P)\) or \(P_{LLM}(S = p|P = [bos])\): Decoding probability of the prefix itself. LLM decoding often starts with a special token [bos], which can be thought of as the actual prefix here.
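The two definitions above can be sketched in Python: \(P_{LLM}(S|P)\) is a product of next-token probabilities (the chain rule), and \(P_{LLM}(P)\) is the same computation with [bos] as the only prefix. The bigram table `BIGRAM`, its four-word vocabulary, and the helpers `suffix_log_prob` and `prefix_log_prob` are hypothetical; a real LLM conditions on the whole context rather than only the last token.

```python
import itertools
import math

# Hypothetical toy "LLM": a bigram table P(next token | previous token).
BIGRAM = {
    "[bos]": {"great": 0.25, "awful": 0.25, "fun": 0.25, "dull": 0.25},
    "great": {"great": 0.1, "awful": 0.1, "fun": 0.6, "dull": 0.2},
    "awful": {"great": 0.1, "awful": 0.1, "fun": 0.2, "dull": 0.6},
    "fun": {"great": 0.7, "awful": 0.1, "fun": 0.1, "dull": 0.1},
    "dull": {"great": 0.1, "awful": 0.7, "fun": 0.1, "dull": 0.1},
}
VOCAB = ["great", "awful", "fun", "dull"]


def suffix_log_prob(prefix, suffix):
    """log P_LLM(S = suffix | P = prefix) via the chain rule."""
    context = list(prefix)
    total = 0.0
    for token in suffix:
        # log P(s_t | prefix, s_1..s_{t-1}); the toy model reads only the last token.
        total += math.log(BIGRAM[context[-1]][token])
        context.append(token)
    return total


def prefix_log_prob(prefix):
    """log P_LLM(P = prefix) = log P_LLM(S = prefix | P = [bos])."""
    return suffix_log_prob(["[bos]"], prefix)


print(math.exp(prefix_log_prob(["fun", "great"])))  # about 0.175 = 0.25 * 0.7

# Sanity check: for a fixed prefix, P_LLM(S | P) sums to 1 over all suffixes of one length.
total = sum(math.exp(suffix_log_prob(["fun"], list(s)))
            for s in itertools.product(VOCAB, repeat=2))
print(total)  # about 1.0, up to floating-point rounding
```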

What is the flaw in this explanation?

Implementation-wise, the only difference between the direct and channel methods is that the values of the prefix and suffix are swapped. Bayes’ rule, however, relates conditional distributions in which the random variables themselves are swapped (likelihood and posterior).

Both methods, direct and channel, compute likelihood values: \(P_{LLM}(S = class | P = input)\) and \(P_{LLM}(S = input | P = class)\), respectively. Both are values of the same conditional distribution \(P_{LLM}(S|P)\) with the prefix and suffix values swapped, and neither is obtained from the other via Bayes’ rule.
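If the channel score were the “reversed” conditional that Bayes’ rule relates to the direct score, the two swapped likelihoods would satisfy \(P_{LLM}(S = s|P = p)P_{LLM}(P = p) = P_{LLM}(S = p|P = s)P_{LLM}(P = s)\), with both priors taken from [bos] as defined above. The check below uses a hypothetical bigram table (not a real LLM) and hypothetical helpers `suffix_prob` and `prefix_prob`. Each side turns out to be the probability of a different concatenated sequence (p then s versus s then p), so the identity fails in general.

```python
# Hypothetical toy bigram "LLM" (illustrative numbers; each row sums to 1).
# Plain probabilities are fine at this size; real code would use log space.
BIGRAM = {
    "[bos]": {"great": 0.25, "awful": 0.25, "fun": 0.25, "dull": 0.25},
    "great": {"great": 0.1, "awful": 0.1, "fun": 0.6, "dull": 0.2},
    "awful": {"great": 0.1, "awful": 0.1, "fun": 0.2, "dull": 0.6},
    "fun": {"great": 0.7, "awful": 0.1, "fun": 0.1, "dull": 0.1},
    "dull": {"great": 0.1, "awful": 0.7, "fun": 0.1, "dull": 0.1},
}


def suffix_prob(prefix, suffix):
    """P_LLM(S = suffix | P = prefix) via the chain rule."""
    context = list(prefix)
    prob = 1.0
    for token in suffix:
        prob *= BIGRAM[context[-1]][token]
        context.append(token)
    return prob


def prefix_prob(tokens):
    """P_LLM(P = tokens), i.e. P_LLM(S = tokens | P = [bos])."""
    return suffix_prob(["[bos]"], tokens)


p, s = ["fun"], ["great"]  # an input and a verbalized class
# Direct likelihood times prior: the probability of "[bos] fun great".
lhs = suffix_prob(p, s) * prefix_prob(p)
# Channel likelihood times prior: the probability of "[bos] great fun".
rhs = suffix_prob(s, p) * prefix_prob(s)
print(lhs, rhs)  # about 0.175 vs 0.15, so the "Bayes identity" does not hold
```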

Let’s write down Bayes’ rule for conditional generation:

\[P_{LLM}(S|P) = \frac{P_{LLM}(P|S)P_{LLM}(S)}{P_{LLM}(P)}\]

which should be read as

\[P_{LLM}(Suffix|Prefix) = \frac{P_{LLM}(Prefix|Suffix)P_{LLM}(Suffix)}{P_{LLM}(Prefix)}\]

We explicitly wrote out the names of the random variables to avoid confusing random variables with their values, which we believe was also the source of the authors’ confusion.
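For comparison, solving the same rule for the posterior over prefixes and expanding the marginal \(P_{LLM}(Suffix)\) with the law of total probability gives the expression below. This sketch assumes that the prior \(P_{LLM}(Prefix = p)\) is the prefix decoding probability \(P_{LLM}(S = p|P = [bos])\) defined earlier; the sum runs over every possible prefix \(p'\).

\[P_{LLM}(Prefix = p|Suffix = s) = \frac{P_{LLM}(Suffix = s|Prefix = p)P_{LLM}(Prefix = p)}{\sum_{p'} P_{LLM}(Suffix = s|Prefix = p')P_{LLM}(Prefix = p')}\]

Every factor on the right-hand side is a likelihood of the form \(P_{LLM}(S|P)\), but the denominator needs one such term for each candidate prefix.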

Note that with a forward pass and the autoregressive assumption, we can only compute \(P_{LLM}(S|P)\), i.e., the likelihood. A forward pass through the LLM does not directly give us \(P_{LLM}(P|S)\). This becomes clearer if we write down the meaning of the latter term precisely.

\(P_{LLM}(P = p | S = s)\): Probability that the prompt (condition) was \(p\), given that the LLM, conditioned on that prompt, generated \(s\).
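To make this term concrete, the sketch below computes \(P_{LLM}(P = p | S = s)\) by brute-force Bayes’ rule under two loudly stated assumptions: the model is a hypothetical bigram table rather than a real LLM, and the prefix space is restricted to the four single-token prefixes of its vocabulary, so the sum over prefixes can be enumerated. The function names `suffix_prob` and `prefix_posterior` are hypothetical.

```python
# Hypothetical toy bigram "LLM" (illustrative numbers; each row sums to 1).
BIGRAM = {
    "[bos]": {"great": 0.25, "awful": 0.25, "fun": 0.25, "dull": 0.25},
    "great": {"great": 0.1, "awful": 0.1, "fun": 0.6, "dull": 0.2},
    "awful": {"great": 0.1, "awful": 0.1, "fun": 0.2, "dull": 0.6},
    "fun": {"great": 0.7, "awful": 0.1, "fun": 0.1, "dull": 0.1},
    "dull": {"great": 0.1, "awful": 0.7, "fun": 0.1, "dull": 0.1},
}
VOCAB = ["great", "awful", "fun", "dull"]


def suffix_prob(prefix, suffix):
    """P_LLM(S = suffix | P = prefix) via the chain rule."""
    context = list(prefix)
    prob = 1.0
    for token in suffix:
        prob *= BIGRAM[context[-1]][token]
        context.append(token)
    return prob


def prefix_posterior(suffix, candidates):
    """P(P = p | S = suffix) for each candidate prefix p.

    Uses the prior P_LLM(P = p) = P_LLM(S = p | P = [bos]) and assumes the
    candidate list is the entire prefix space (a real LLM's is not enumerable).
    """
    joint = {tuple(p): suffix_prob(["[bos]"], p) * suffix_prob(p, suffix)
             for p in candidates}
    evidence = sum(joint.values())  # marginal P(S = suffix)
    return {p: j / evidence for p, j in joint.items()}


posterior = prefix_posterior(["great"], [[w] for w in VOCAB])
print(posterior[("fun",)])              # about 0.7: P(P = fun | S = great)
print(suffix_prob(["great"], ["fun"]))  # 0.6: P_LLM(S = fun | P = great), a channel-style score
```

In this toy, the posterior that the prompt was `fun` given that the model generated `great` is about 0.7, while the swapped likelihood \(P_{LLM}(S = fun | P = great)\) is 0.6, so the two quantities are different numbers.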